This blog explains what a website chatbot based on LLMs is, how you could hypothetically build one, and the peculiarities involved.

Note: This is an updated version of our 2023 blog post. The field of AI and LLMs has evolved rapidly since then (with new models and more efficient implementations), so we've refreshed this guide to reflect the current state of the art in 2025 while maintaining our original approach. Our solution now dramatically reduces API costs by caching responses to commonly asked questions (making website chatbots economically practical for most organizations while maintaining effectiveness).

Traditional NLP-based chatbots typically rely on rule-based systems, pattern matching, template-based responses, and neural networks trained on specific question-answer pairs. These techniques continue to work for specific applications but require either extensive datasets of question-answer pairs or human-defined rules.

In contrast, modern LLM-based approaches offer greater flexibility by leveraging general language understanding capabilities combined with contextual information. Rather than requiring exhaustive question-answer pairs for every possible query, these systems can process and reason about content dynamically when provided with relevant context.

For websites like the City of Amsterdam portal, this means we can leverage the wealth of information already published without creating a new dataset of specific questions and answers. The same approach applies to any content-rich website, from corporate documentation to e-commerce platforms.

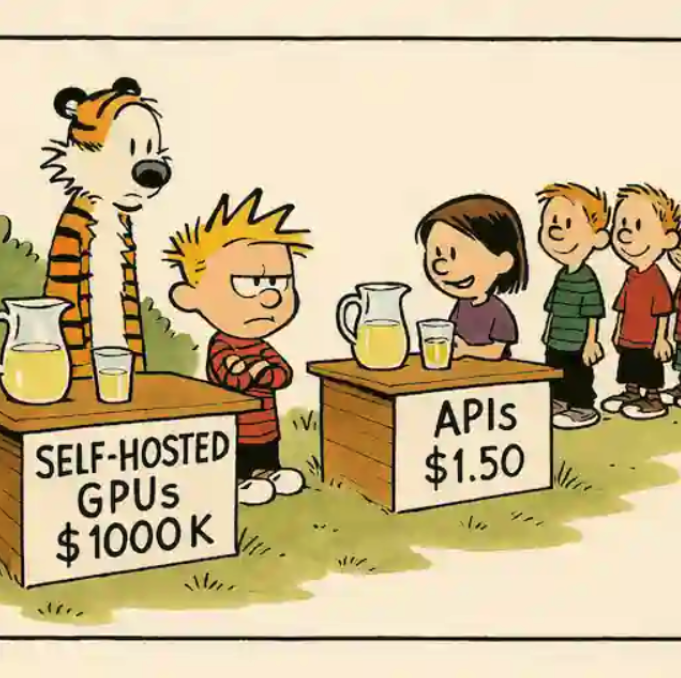

Throughout 2025, the LLM landscape has continued to evolve rapidly, with increasingly powerful models released by major AI labs and tech companies. When building a website chatbot, you have two main options: self-hosting an LLM on your own hardware, or accessing a managed LLM service through an API.

After experimenting with both approaches, we've found that managed LLM services make more sense for most website chatbot projects. Think of it like choosing between generating your own electricity versus connecting to the power grid - the latter is simply more practical for most homes.

Managed APIs eliminate infrastructure headaches while providing seamless scaling, automatic improvements, and generally higher quality responses. Yes, there are trade-offs: usage-based costs, less control over the model, and data privacy considerations. But with proper implementation strategies (discussed later), these concerns can be mitigated while preserving the quality and simplicity advantages.

Self-hosting does make sense in some scenarios - particularly when you have data sovereignty requirements or want to implement more advanced caching strategies that require access to model internals. However, be prepared for substantial hardware investments, specialized expertise requirements, and development time spent on infrastructure rather than your core product.

As we noted in our 2023 blog, and as remains true today: "Only self-host an LLM if you really need to!"

Figure 1: Self-Hosted GPUs vs. API-Based LLM Access (Image courtesy of S. Anand)

One of the most crucial aspects of working with LLMs is effective prompt crafting. When building a website chatbot, we use a template-based approach that combines the user's question with relevant context:

Answer this question:

{question}

By using this context:

{document 1}

{document 2}

{document 3}

{document …}

The LLM doesn't need to be fine-tuned on your website's content. Instead, it uses its general language understanding capabilities to interpret the question and generate an answer based on the provided context.
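As a rough illustration, the template above can be filled in with a few lines of Python. This is a minimal sketch: the names `PROMPT_TEMPLATE` and `build_prompt` are our own placeholders for this example, and the retrieved documents are assumed to arrive as plain strings.

```python
# Hypothetical helper names; the wording of the template follows the text above.
PROMPT_TEMPLATE = """Answer this question:

{question}

By using this context:

{context}"""


def build_prompt(question: str, documents: list[str]) -> str:
    """Fill the template with the user's question and the retrieved documents."""
    # Separate documents with a blank line, mirroring the template layout.
    context = "\n\n".join(doc.strip() for doc in documents)
    return PROMPT_TEMPLATE.format(question=question.strip(), context=context)


if __name__ == "__main__":
    print(build_prompt("When is the office open?", ["Document one.", "Document two."]))
```

The resulting string is what gets sent to the LLM; no model-specific formatting is assumed here.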

To build an effective website chatbot, we need to extract and process the relevant content. Our approach involves:

Before explaining our implementation, Table 1 below highlights the landscape of varying techniques for building website chatbots.

Table 1: Key Characteristics of RAG, CAG, and Simple Caching

As we evaluated these methods, we found that none provided the ideal balance we were seeking, as each has limitations for practical website chatbot implementations. RAG requires complex infrastructure and has higher ongoing costs, CAG needs access to model internals that commercial APIs don't provide, and simple response caching lacks semantic understanding. These limitations inspired us to develop our hybrid approach. Here's how it works:

We first build and cache a searchable knowledge base:

Our approach implements RAG with multi-tier caching to improve efficiency. Unlike “true” CAG, which would require direct access to the LLM's internal key-value cache tensors (not possible with commercial LLM APIs, as we’ve noted), our system achieves performance gains by caching at the retrieval application level. We preserve processed vector embeddings and retrieval results to avoid redundant computation.

Our caching system uses simple, standard storage technologies rather than specialized vector databases. It’s important to note that while we are still using vector embeddings, we found that dedicated vector databases were unnecessarily complex for many website chatbots, and this simpler storage approach offers several key advantages:

This simpler storage and structural approach translates to lower infrastructure costs and easier maintenance compared to full vector database implementations (which, as we noted in our original blog, were probably a bit overkill).
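To make the idea concrete, here is a minimal sketch of storing embeddings in a standard store and searching them by brute force. SQLite and JSON are our assumptions for the example (the text above only says "simple, standard storage technologies"), the function names are hypothetical, and the embeddings are assumed to be plain lists of floats produced elsewhere.

```python
import json
import math
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a SQLite file that holds text chunks and their embeddings."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS chunks ("
        "id INTEGER PRIMARY KEY, text TEXT NOT NULL, embedding TEXT NOT NULL)"
    )
    return conn


def add_chunk(conn: sqlite3.Connection, text: str, embedding: list[float]) -> None:
    """Persist one chunk; the embedding is serialised as a JSON array."""
    conn.execute(
        "INSERT INTO chunks (text, embedding) VALUES (?, ?)",
        (text, json.dumps(embedding)),
    )
    conn.commit()


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(conn: sqlite3.Connection, query: list[float], top_k: int = 3) -> list[tuple[float, str]]:
    """Score every stored chunk against the query and return the best top_k."""
    rows = conn.execute("SELECT text, embedding FROM chunks").fetchall()
    scored = [(cosine(query, json.loads(emb)), text) for text, emb in rows]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

A linear scan like this is only reasonable for a modest number of chunks, which is the scale this approach targets.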

We've found this approach particularly effective for small to medium websites (up to a few thousand pages) with relatively stable content that changes infrequently. It really shines in budget-conscious implementations where many users ask similar questions but still need semantic understanding, and in use cases where response time affects user satisfaction. That being said, vector databases are still incredibly powerful and remain the better choice when your needs scale to billions of vectors, complex metadata filtering, or advanced ANN (Approximate Nearest Neighbor) algorithms optimized for high-dimensional vectors.

At the core of our system lies a progressive question-handling strategy to balance speed, cost, and accuracy. The idea is to avoid immediately calling the expensive LLM API for every query when simpler, faster methods might suffice.

First, we start with an exact match approach. After normalising the question by removing stop words, stripping punctuation, and standardising case, we check whether this exact question exists in our cache using a simple hash lookup. This fast, straightforward method instantly returns cached answers for questions we've seen before.
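A minimal sketch of this first tier could look as follows. The stop-word list, the choice of SHA-256 for the key, and the names `normalise`, `cache_key`, and `ExactMatchCache` are assumptions made for the example.

```python
import hashlib
import string
from typing import Optional

# Illustrative stop-word list; a real deployment would use a fuller one.
STOP_WORDS = frozenset(
    {"a", "an", "the", "is", "are", "do", "does", "i", "to", "of", "in", "for", "can", "my"}
)


def normalise(question: str) -> str:
    """Lower-case, strip ASCII punctuation, and drop stop words."""
    lowered = question.lower()
    no_punct = lowered.translate(str.maketrans("", "", string.punctuation))
    return " ".join(word for word in no_punct.split() if word not in STOP_WORDS)


def cache_key(question: str) -> str:
    """Hash the normalised question so equivalent phrasings share one key."""
    return hashlib.sha256(normalise(question).encode("utf-8")).hexdigest()


class ExactMatchCache:
    """In-memory cache keyed by the hash of the normalised question."""

    def __init__(self) -> None:
        self._answers: dict[str, str] = {}

    def get(self, question: str) -> Optional[str]:
        return self._answers.get(cache_key(question))

    def put(self, question: str, answer: str) -> None:
        self._answers[cache_key(question)] = answer
```

With this normalisation, "How do I apply for a parking permit?" and "how do i apply for a PARKING permit" map to the same key, so the second phrasing is served from the cache.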

Of course, people rarely phrase questions identically, which is why our second tier employs similarity matching. When no exact match exists, we use Jaccard similarity to identify cached questions that share substantial keyword overlap. If we find a question that exceeds our 90% similarity threshold, we deliver its cached answer along with a note about the match. This maintains the speed advantage while accommodating natural language variations.
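Jaccard similarity is the size of the intersection of two token sets divided by the size of their union. The sketch below shows one way to apply it to cached questions; the tokenisation and the function names are assumptions for illustration, while the 0.9 threshold comes from the text above.

```python
from typing import Optional


def tokens(question: str) -> set[str]:
    """Lower-case the question and split it into a set of alphanumeric words."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in question.lower())
    return set(cleaned.split())


def jaccard(a: set[str], b: set[str]) -> float:
    """|a & b| / |a | b|; defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def find_similar(
    question: str, cached: dict[str, str], threshold: float = 0.9
) -> Optional[tuple[str, str, float]]:
    """Return (answer, matched_question, score) for the best match above threshold."""
    query = tokens(question)
    best_question, best_score = None, 0.0
    for candidate in cached:
        score = jaccard(query, tokens(candidate))
        if score > best_score:
            best_question, best_score = candidate, score
    # "Exceeds" the threshold is read here as strictly greater than.
    if best_question is not None and best_score > threshold:
        return cached[best_question], best_question, best_score
    return None
```

Returning the matched question alongside the answer makes it easy to add the note about the match that accompanies the response.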

Only when these faster methods fail do we activate our third tier, which combines vector search with LLM generation. This process begins with a semantic search through our vector index to find the most relevant text chunks from the website. We then insert these retrieved passages into our prompt template alongside the user's original question. The complete prompt goes to the LLM API, and the freshly generated answer joins our cache for future use.
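Putting the three tiers together, the control flow can be sketched as a single function. Everything here is hypothetical scaffolding: the lookups, the retriever, and the LLM call are passed in as plain callables, so no particular vendor API or storage layer is assumed.

```python
from typing import Callable, Optional

PROMPT = "Answer this question:\n\n{question}\n\nBy using this context:\n\n{context}"


def answer_question(
    question: str,
    exact_lookup: Callable[[str], Optional[str]],
    similar_lookup: Callable[[str], Optional[tuple[str, str]]],
    retrieve: Callable[[str], list[str]],
    call_llm: Callable[[str], str],
    store: Callable[[str, str], None],
) -> tuple[str, str]:
    """Return (answer, tier), where tier names the step that produced the answer."""
    # Tier 1: exact match on the (normalised) question.
    cached = exact_lookup(question)
    if cached is not None:
        return cached, "exact"

    # Tier 2: a sufficiently similar cached question, returned with a note.
    similar = similar_lookup(question)
    if similar is not None:
        answer, matched = similar
        return f"{answer}\n\n(Based on a similar question: {matched!r})", "similar"

    # Tier 3: retrieve relevant chunks, build the prompt, and call the LLM.
    chunks = retrieve(question)
    prompt = PROMPT.format(question=question, context="\n\n".join(chunks))
    answer = call_llm(prompt)
    store(question, answer)  # cache the fresh answer for future queries
    return answer, "llm"
```

Injecting the callables keeps the sketch testable with stubs: a plain dictionary can stand in for the cache (`exact_lookup=cache.get`, `store=cache.__setitem__`) and a fake function for the LLM.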

Although implementing a comprehensive feedback system was out of scope for our project, we recommend adding this feature to any production deployment. A simple thumbs up/down mechanism after each response can provide invaluable data for improving your chatbot over time.

The evolution of website chatbots since 2023 has been focused not just on new capabilities, but on making implementations more practical, cost-effective, and maintainable.

What's most exciting is how accessible this technology has become - organizations of all sizes can now implement effective website chatbots without massive AI expertise or infrastructure.

If you are interested in what we can do for your company, contact us!

Analytics Engineer at Xomnia

Data Scientist at Xomnia