This blog is the fourth in a series on AI business challenges, written by Xomnia’s Analytics Translators.

In this blog, Xomnia’s Analytics Translator Jelle Stienen explains, from a practical point of view, the input needed to construct an AI use case and how to prove the value of such a use case. Keep in mind that these questions are often hard to answer, since many influences and interferences affect AI projects within complex organizations. Nevertheless, the approach we lay out aims to help you set goals and measure success in AI projects - an often overlooked, but crucial, step.

Perception of success

About a year ago, the Tokyo 2020 Summer Olympic Games took place, where over 11,000 athletes competed in 50 different disciplines. Every athlete had their own tailor-made plan and goal during the years of preparation and training that precede going to the Olympics. Putting in tremendous amounts of effort and dedication for multiple years can be described as the ultimate form of discipline to achieve a goal. Consider two of these athletes.

The first athlete had the goal of winning a gold medal, but instead won a bronze medal. When interviewed, she started crying, expressing how disappointed she was because the outcome didn't justify all the effort that she had put in during the years leading up to the Olympics. She concluded that her performance was “a complete failure”.

The second athlete had changed her goal from winning a medal to participating in an Olympic final. This adjustment in her goals made sense after a tough year during which she endured several injuries. As she crossed the finish line, she thought to herself happily: “An unbelievable 4th place! This exceeds my wildest expectations.”

The two stories above demonstrate that the perception of success depends on the predefined goals that one sets for themselves. Being successful doesn't mean scoring 100% or finishing in first place; it's about accomplishing the goal that you set, or reaching the highest achievement that your full potential can get you. This also means that the goal that you set, or what you perceive to be a success, can change over time.

We see the same in AI projects: whether you are successful is determined by measuring against the goal that you set before the start of the project. A 2019 VentureBeat report states that 87% of data science projects never make it to production. One could argue that without making it to production, the goal set beforehand for these projects is most likely not achieved - unless, of course, the goal is only to experiment with AI. After all, as the example above shows, what counts as failure for some can count as success for others.

Start seeing AI as a means to an end: How to define your problem

When all you have is a hammer, everything looks like a nail. By forcing AI to be a solution for a certain problem, it's likely that you will end up with an unsolved problem. Adhere to real and carefully scoped problems and match the relevant technology to the problem, not the other way around.

Below are seven questions that we at Xomnia always try to answer as completely as possible to properly define the problem before starting an AI project:

- What is the basic question to be solved?

- What is the perspective/context of the problem?

- What is the scope of the solution space?

- What are the criteria for success?

- Who are the main stakeholders / decision makers?

- What are the constraints in the solution space?

- What are the key sources of insight of the problem?

After you’ve answered these questions with the problem owner, you can make a first assessment of what kind of solution is needed and start filling in the Use Case Canvas as discussed in the previous post in this blog series.

Types of success in AI projects

We have now established that we need to set goals in order to measure the success of our AI project. Next, we need to define what types of success we can have in our AI project, and what goals lead up to that success.

Begin by defining the kinds of success that you want to achieve, which, based on Critical Success Factors for Artificial Intelligence Projects, can be grouped into one of the following categories:

1) Commercial success

When talking about adding value, people refer mostly to earning or saving more money in comparison with the status quo. An example of a commercial goal is: “By automating the manual process of assessing insurance claims, we want to save up to 3 FTE, resulting in a yearly saving of 120K Euros”. Another example is: “By personalizing the recommendations given to our customers, we aim to upsell by 2 products per customer on average, resulting in a 10% increase in the profitability per customer.”

2) Contractual success

Contractual success can refer to achieving the intended outcomes, which are often agreed upon with a project's stakeholders beforehand. This can refer to commercial goals, but also other activities. For example: “We want to set up a complete cloud infrastructure to be able to develop and run machine learning models in a production environment.”

3) Ethical success

An example of an ethical success goal for an AI project is the elimination of the biased outcomes that can naturally occur when humans are involved in a given process. For example: “We want to support the social welfare allocation process with an explainable and transparent model.” Fairness metrics can be defined as success factors to measure this.
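As a minimal sketch of what such a fairness metric could look like, the snippet below computes the demographic parity difference: the gap in positive-decision rates between groups. This is only one of several possible fairness metrics and is our own illustration, not a method prescribed in this blog; the function name, the group labels and the toy data are all hypothetical.

```python
from collections import defaultdict


def demographic_parity_difference(decisions, groups):
    """Return the largest gap in positive-decision rate between groups.

    decisions: sequence of 0/1 outcomes (1 = positive decision)
    groups: sequence of group labels, aligned with decisions
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    # Positive-decision rate per group
    rates = {group: positives[group] / totals[group] for group in totals}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical toy data: eight decisions spread over two groups
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap, rates = demographic_parity_difference(decisions, groups)
print(f"Rates per group: {rates}, gap: {gap:.2f}")
```

A success criterion could then be phrased as a threshold on this gap, agreed upon with the stakeholders before the experiment starts.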

4) Measurable success

This success category comes closest to assessing the technical performance of AI models. An example is the operational improvement of a logistics process after implementing an AI-driven product: “With the automation introduced, the quality score on this process, which is 65% when done by humans, goes up to 95% when using this AI model.”

5) Learning as success

Some view an AI project as a failure because its success can’t be measured based on any of the aforementioned categories. Others, however, see the process itself as a learning success, which may have been the organization's goal for the project in the first place. In fact, an increasing number of organizations have “experimenting with AI” as a goal in their yearly strategies, under which they set goals such as: “This year, we want to experiment with AI by doing 3 Proofs of Concept in order to improve knowledge and experience within AI in our business.”

Xomnia’s practical approach to achieving success

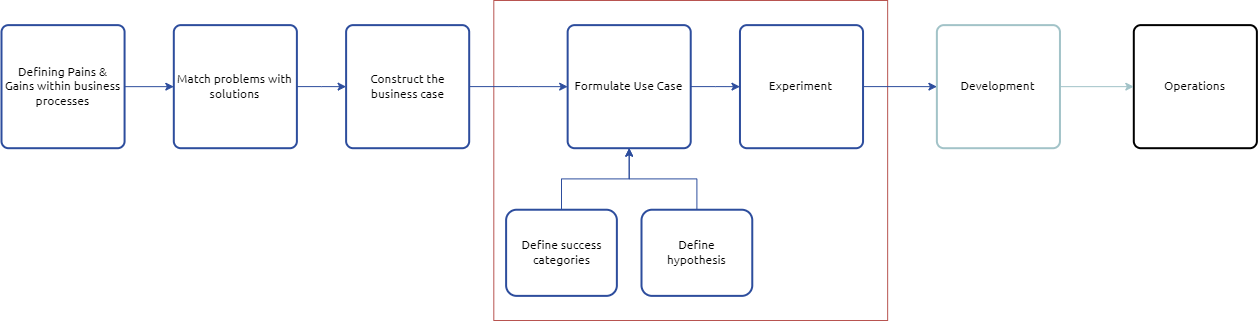

When following Xomnia’s approach to define and execute projects, the rule of thumb is to always start from the problem and never from the solution or use case. This way, you’re forced to treat AI as a means to an end, and not the other way around. In this blog, we focus on the outlined part of the diagram above, which summarizes the whole journey of an AI product.

Now that we’ve covered success categories in an AI project, we will next cover how to set hypotheses and how to experiment with them.

What is a hypothesis?

A hypothesis is a proposed explanation for something. It contains a provisional idea or an educated guess that requires evaluation. Testing a hypothesis helps us determine whether our findings are statistically significant. This means that a good hypothesis is testable: it can be shown to be either true or false. In a scientific approach, the hypothesis is constructed before any actual research is conducted. In a business environment, reality often overlaps with the experiment. Nevertheless, it is important to phrase the hypothesis before the outcome of the test is known.

In Xomnia's Way of Working whitepaper, there are two types of hypotheses defined:

1) Type 1 (model performance): Hypotheses about the performance of the model itself, e.g., its accuracy, fairness, interpretability, etc. These can be monitored, evaluated and inspected by the development team (when properly defined).

2) Type 2 (solution performance): Hypotheses about the performance of the solution in relation to the stakeholder, e.g., its explainability, business impact, decision making, complementarity, acceptability, usability, etc. These should be evaluated together with the stakeholders, preferably through rapid successive prototyping and situated human-in-the-loop experimentation.

How to experiment with AI projects and what to pay attention to when doing so?

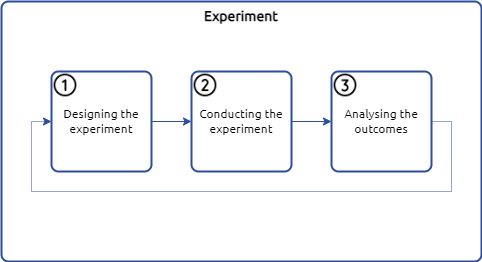

We can identify three steps in proving value in AI projects, also often referred to as experiment design:

1) Designing the experiment

The most important and cumbersome step of the process is the actual design of an experiment. We believe that having a baseline in combination with clear goals, preconditions, stakeholder/end-user involvement and documentation is crucial for a successful experiment.

Setting a baseline

First of all, you need to determine the quantified situation that you will compare your experiment to. This serves to set a baseline for your experiment. If there isn’t a model already in place, you can attempt to recreate the “scoring metric” of the existing process.

A golden rule for this is: Recalculate the scoring metric for the existing model using the same process you intend to measure your model on.
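To illustrate this golden rule, here is a minimal sketch in which the existing process and the candidate model are scored with the same metric function on the same evaluation set. Accuracy is used purely as an example metric, and the labels and predictions are hypothetical toy data.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical evaluation set with known outcomes
labels = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1]

# Decisions made by the existing process and by the candidate model
baseline_predictions = [1, 0, 0, 1, 0, 0, 0, 1, 1, 0]
model_predictions = [1, 0, 1, 1, 0, 1, 0, 1, 1, 1]

# Both are scored with the exact same function on the exact same data
baseline_score = accuracy(baseline_predictions, labels)
model_score = accuracy(model_predictions, labels)
uplift = model_score - baseline_score
print(f"baseline={baseline_score:.2f} model={model_score:.2f} uplift={uplift:.2f}")
```

Because both scores come from one function and one dataset, any difference between them can be attributed to the model rather than to a change in how the metric is calculated.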

What is the experiment going to look like?

After you’ve set your baseline, it's time to actually design the experiment. Initially, you have to take the following points into consideration:

- What are the (independent & dependent) variables of my experiment?

- What is my hypothesis?

- What are my success categories? E.g. commercial and/or measurable success

- How am I going to measure the results? What type of experiment am I going to perform? E.g. A/B testing or multivariate testing

- When are the results a success or not? Stakeholder involvement is often needed to define this.

- Who or what are the control and test groups?
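For an A/B test with a control and a test group, the question "when are the results a success?" can be made concrete with a significance test. The sketch below uses a two-proportion z-test, which is one common choice and not necessarily the right one for every experiment. The function name and the example counts are hypothetical, and the significance threshold should be agreed upon with stakeholders beforehand.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two success rates.

    Returns the z statistic (positive when group B scores higher)
    and the two-sided p-value.
    """
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled success rate under the null hypothesis of no difference
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("test is undefined when all outcomes are identical")
    z = (rate_b - rate_a) / standard_error
    # Two-sided p-value from the standard normal distribution
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 650 of 1000 successes in control, 700 of 1000 in test
z, p_value = two_proportion_z_test(650, 1000, 700, 1000)
print(f"z={z:.2f}, p={p_value:.3f}")
```

A small p-value suggests that the difference between the groups is unlikely to be due to chance alone. Whether that difference is large enough to count as a commercial or measurable success remains a separate question.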

Necessary resources & tech

Next, define what kind of resources in terms of people & tech are required to conduct the experiment:

- Is the necessary data available to conduct the experiment?

- Who are the end users to test my solution? Are they also on board and/or enthusiastic to cooperate?

- What kind of technical environment am I going to conduct my experiment in?

- Who are all the stakeholders involved?

- Do I have my success metrics & categories well defined?

- Do I have an agreed piece of documentation (usually agreed upon by the relevant stakeholders) for conducting this experiment, describing the reasons for testing, the people and tech needed, and the planning?

Planning the experiment in time

There are a few things to take into consideration when planning an experiment:

- Do we expect seasonality and how do we deal with it?

- When is the exact experiment planned and for how long?

- Are there any interferences with other experiments running?

2) Conducting the experiment

During the phase of conducting experiments, the main focus should be on monitoring if everything is going as designed.

We see three main topics that are important to monitor during the experiment:

A. End-user involvement

- Are the users still interacting as designed with the experiment?

- Is everything working well on a technical & functional level for the end-users?

- Do I have enough support capacity available in case support is needed?

B. Measuring

- Is the uptime/downtime of the system being monitored?

- Are the outcomes being stored correctly?

C. Outcomes

- Is the output data complete, valid and consistent?

- Is my experiment still isolated, i.e. are the results not affected by other experiments or events that influence the data?

- Are my samples representative?
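Some of these checks can be automated while the experiment is running. The sketch below counts stored records with missing fields and tallies how many records each group has collected so far. The record layout, the field names and the function name are hypothetical and only illustrate the idea.

```python
def check_outcomes(records, required_fields, group_field="group"):
    """Count incomplete records and tally the number of records per group."""
    incomplete = 0
    group_sizes = {}
    for record in records:
        # A record is incomplete if any required field is missing or None
        if any(record.get(field) is None for field in required_fields):
            incomplete += 1
        group = record.get(group_field)
        group_sizes[group] = group_sizes.get(group, 0) + 1
    return incomplete, group_sizes


# Hypothetical stored outcomes of a running experiment
records = [
    {"user_id": 1, "group": "control", "outcome": 0},
    {"user_id": 2, "group": "test", "outcome": 1},
    {"user_id": 3, "group": "test", "outcome": None},  # outcome was not stored
    {"user_id": 4, "group": "control", "outcome": 1},
]
incomplete, group_sizes = check_outcomes(records, ["user_id", "group", "outcome"])
print(f"incomplete records: {incomplete}, group sizes: {group_sizes}")
```

A growing number of incomplete records, or group sizes that drift away from the designed split, is a signal to investigate before the experiment ends rather than during the analysis.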

3) Analyzing the outcomes

When the experiment is finished, the goal is to spend as little time as possible on actually processing and analyzing the outcomes. The effort that you put into the designing and conducting phases should allow you to analyze the outcomes swiftly and effectively. Three main points in this phase are:

A. Evaluating results

- Interpreting & understanding the outcomes: For instance, by visualizing and performing scenario analysis

- Analyzing success metrics: Did we succeed?

- Evaluating the process of the experiment: What are topics suitable for improvement for next experiments?

B. Documenting results

- Storing results, code and learnings

- Formulating advice for additional actions, experiments and, if relevant, next steps

C. Sharing results & next steps

- Presenting back to the business stakeholders and communicating to the involved end users

- Deciding on next steps

Download Xomnia's Way of Working whitepaper

—

Xomnia has a team of analytics translators (ATs) dedicated to assisting clients with the business aspects of AI challenges. They do so in several roles, such as AI Product Owner (product-focused), AI evangelist (adoption-focused), AI ethicist (responsibility-focused), or Strategic AI advisor (strategy-focused). If you are interested in how to successfully develop a data product, you can also download our whitepaper on this topic.

Would you like to learn more or have a conversation about AI business challenges at your organization? Get in touch: info@xomnia.com